Bio

Huiyuan Yang is an Assistant Professor in the Department of Computer Science at Missouri S&T. Prior to this role, he was a Postdoctoral Research Associate at Rice University, where he worked with Prof. Akane Sano in both the Computational Wellbeing Group and Scalable Health Labs. He received his PhD in Computer Science from Binghamton University-SUNY in 2021, where he was advised by Prof. Lijun Yin. He received his Master's degree from the Chinese Academy of Sciences, Beijing, in 2014, and his B.E. from Wuhan University in 2011.

His work has appeared in top-tier venues including CVPR, ICCV, Transactions on Affective Computing, and ACM MM. He has served as a PC member or reviewer for NeurIPS, ICLR, KDD, IJCAI, AAAI, AISTATS, ACM MM Asia, ACII, FG, and others. He is also an active reviewer for journals such as T-AFFC, T-MM, T-CSVT, T-IP, T-PAMI, SIVP, ACM-TOMM, AIHC, IMAGE, IMAVIS, JEI, PR, PRL, MMSJ, and NCAA. He co-organized the 3DFAW-2019 Challenge and Workshop (ICCV 2019) and the Workshop on Human-Centric Self-supervised Learning (AAAI 2022), and was the lead organizer of the workshop Towards Multimodal Wearable Signals for Stress Detection (EMBC 2022). He received the Excellence in Research award from the Department of Computer Science at Binghamton University in 2021, as well as the Binghamton University Distinguished Dissertation Award (2021). He also co-released several popular multimodal facial datasets, including BU-EEG, 3DFAW, BP4D+, and BP4D++.

Selected Publications

2023

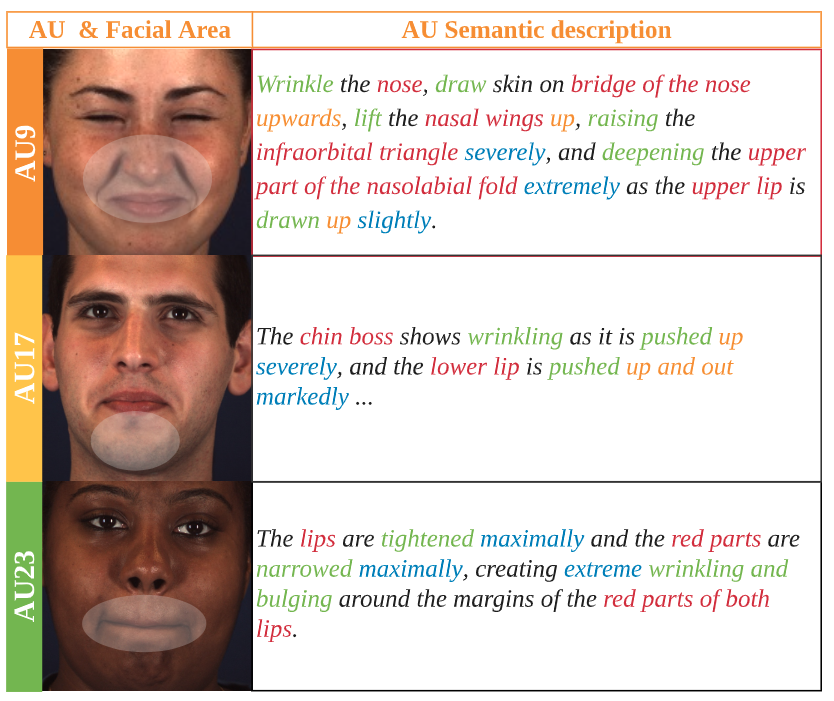

Disagreement Matters: Exploring Internal Diversification for Redundant Attention in Generic Facial Action Analysis

IEEE Transactions on Affective Computing

Xiaotian Li, Zheng Zhang, Xiang Zhang, Taoyue Wang, Zhihua Li, Huiyuan Yang, Umur Ciftci, Qiang Ji, Jeffrey Cohn, and Lijun Yin

Paper

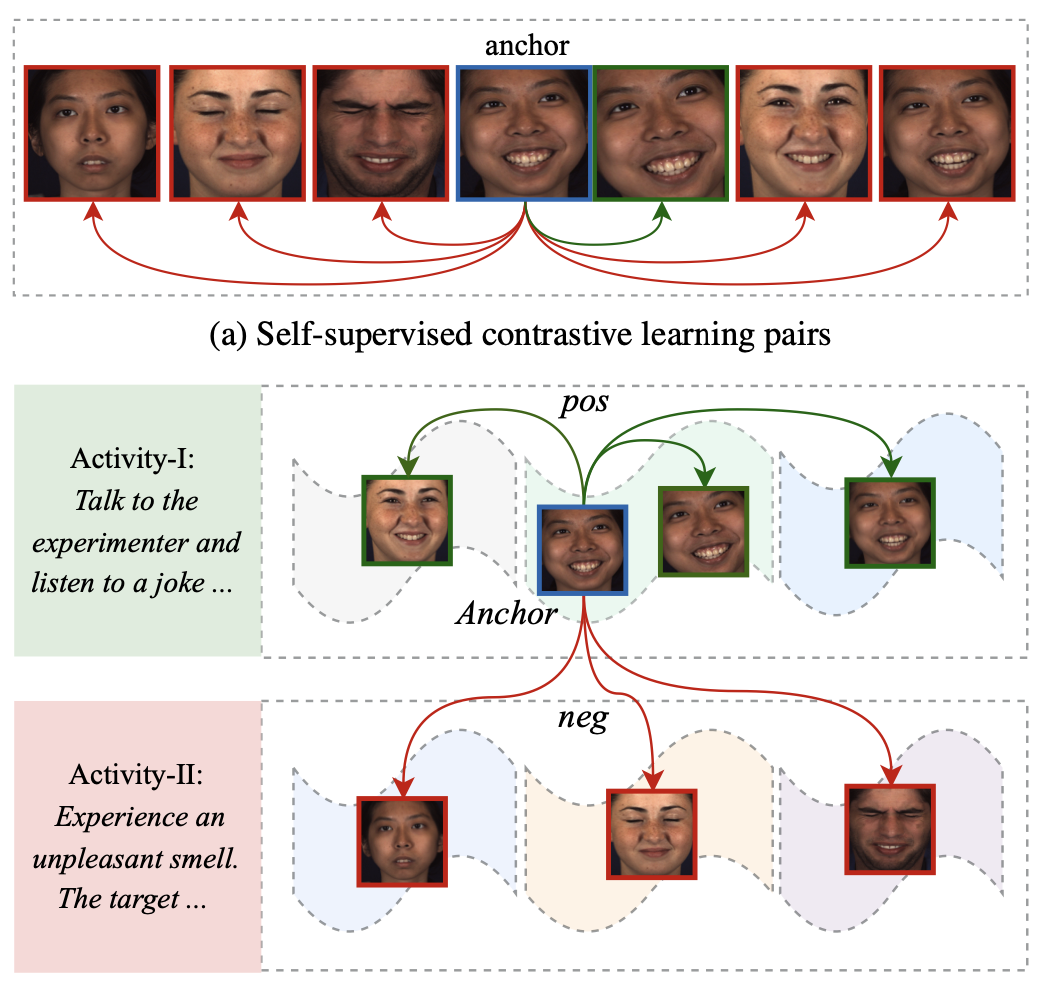

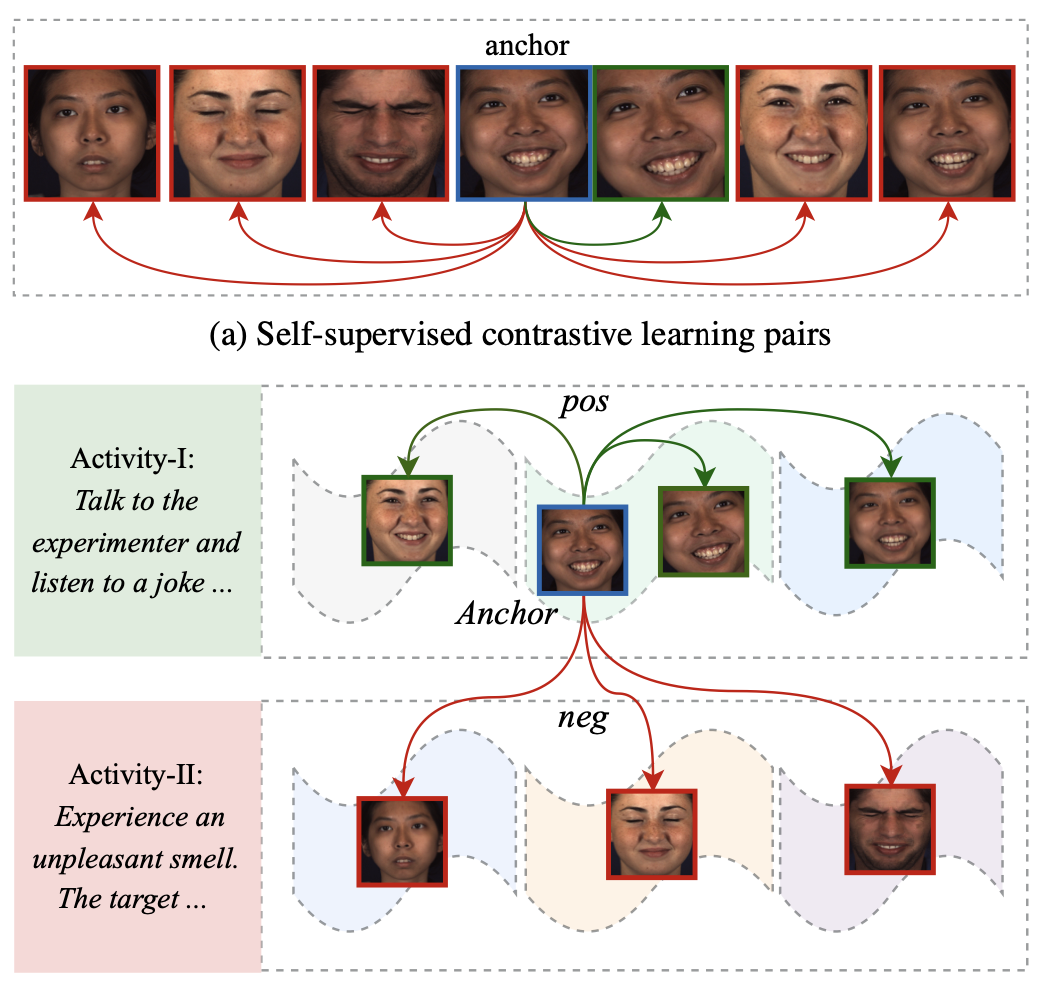

Weakly-Supervised Text-driven Contrastive Learning for Facial Behavior Understanding

IEEE/CVF International Conference on Computer Vision (ICCV 2023)

Xiang Zhang, Xiaotian Li, Taoyue Wang, Huiyuan Yang, Lijun Yin

arXiv

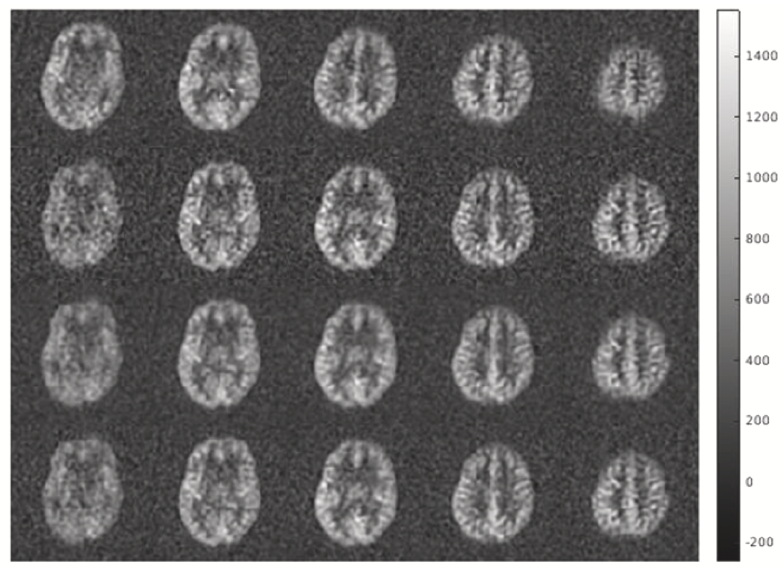

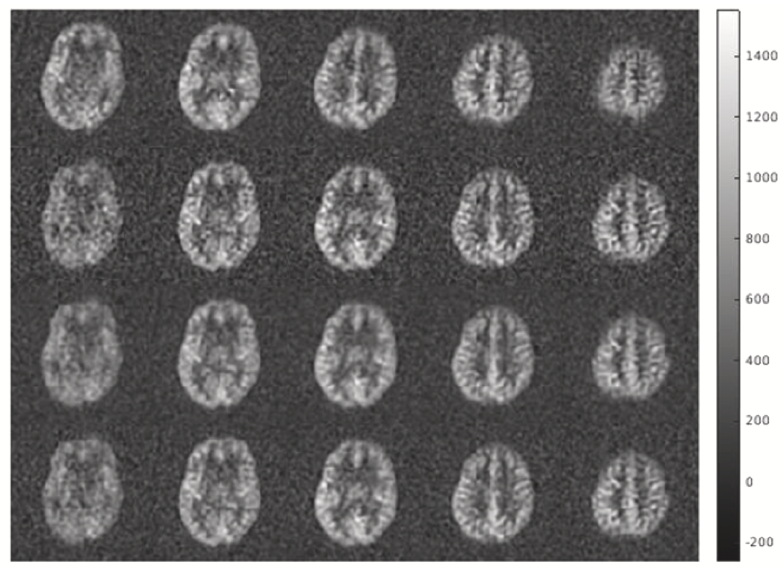

Affine image registration of arterial spin labeling MRI using deep learning networks

NeuroImage, 2023

Zongpai Zhang, Huiyuan Yang, Yanchen Guo, Nicolas R. Bolo, Matcheri Keshavan, Eve DeRosa, Adam K. Anderson, David Alsop, Lijun Yin, Weiying Dai

Paper

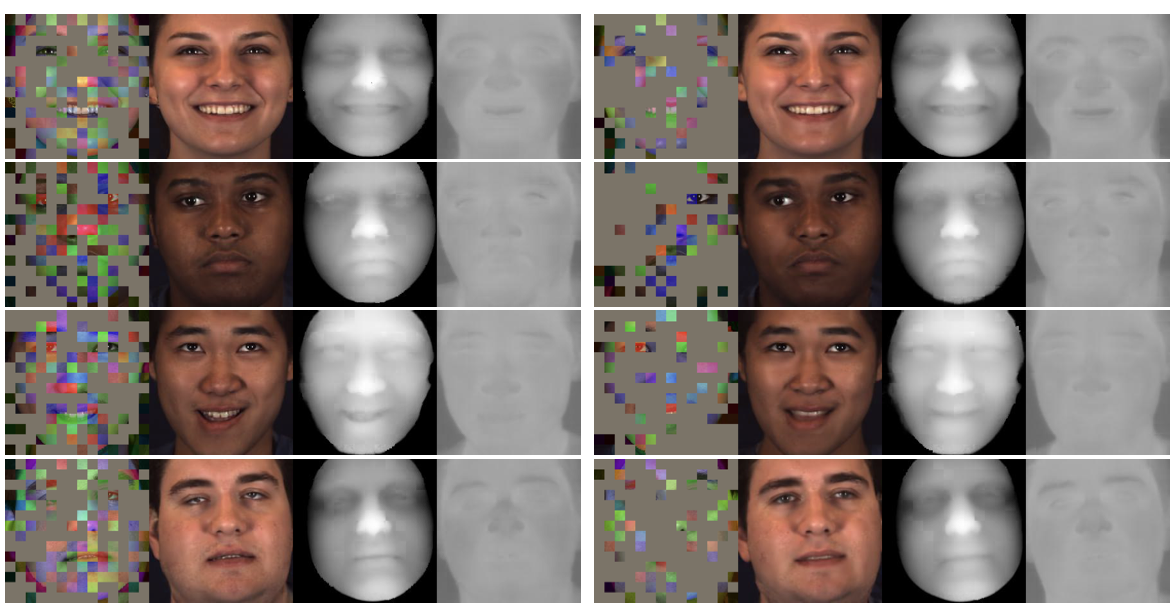

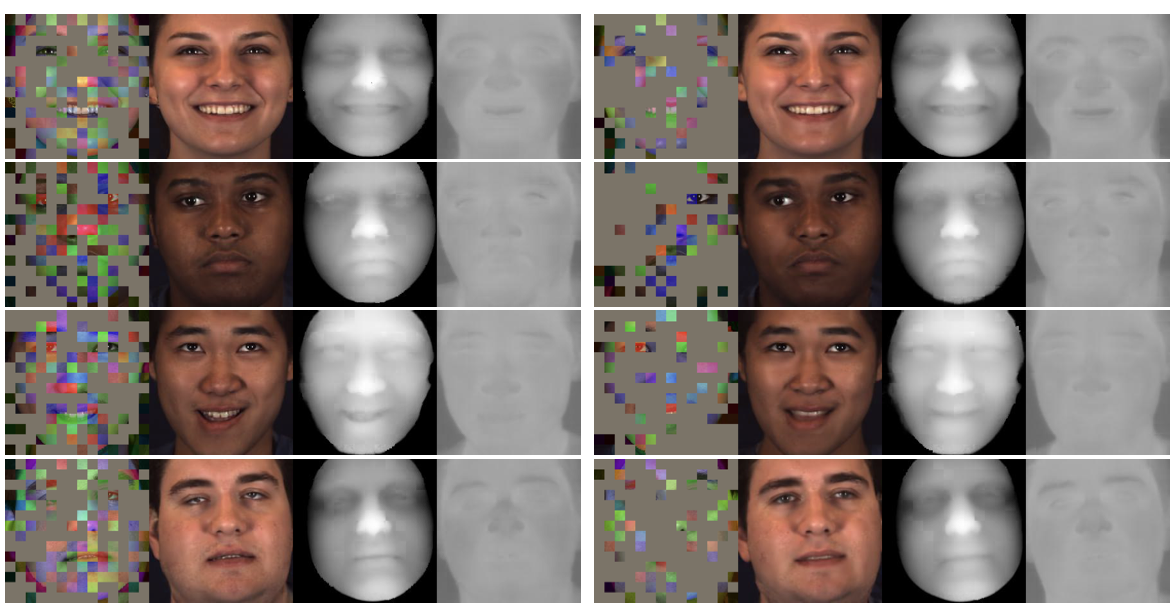

Multimodal Channel-Mixing: Channel and Spatial Masked AutoEncoder on Facial Action Unit Detection

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

Xiang Zhang, Huiyuan Yang, Taoyue Wang, Xiaotian Li, Lijun Yin

arXiv

2022

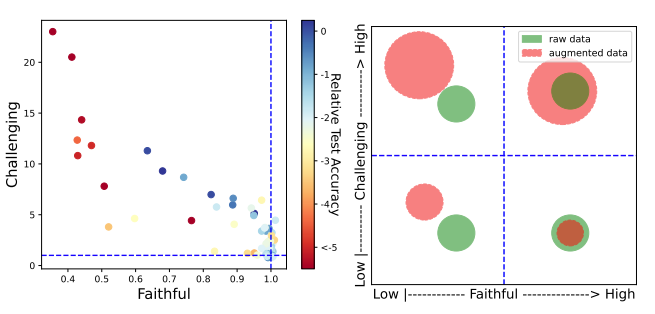

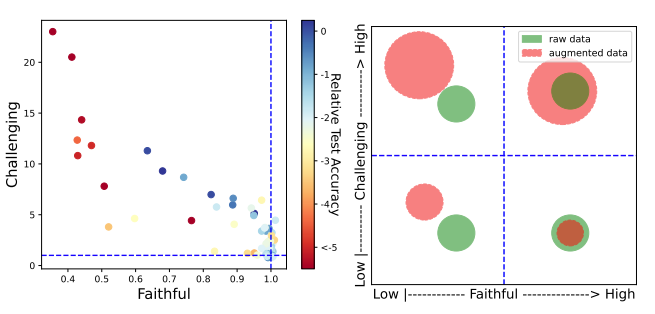

Empirical Evaluation of Data Augmentations for Biobehavioral Time Series Data with Deep Learning

NeurIPS Workshop, 2022

Huiyuan Yang, Han Yu, Akane Sano

Paper

arXiv

GitHub

More to Less (M2L): Enhanced Health Recognition in the Wild with Reduced Modality of Wearable Sensors

The IEEE Engineering in Medicine and Biology Society (EMBC), 2022

Huiyuan Yang, Han Yu, Kusha Sridhar, Thomas Vaessen, Inez Myin-Germeys, Akane Sano.

arXiv

GitHub

2021

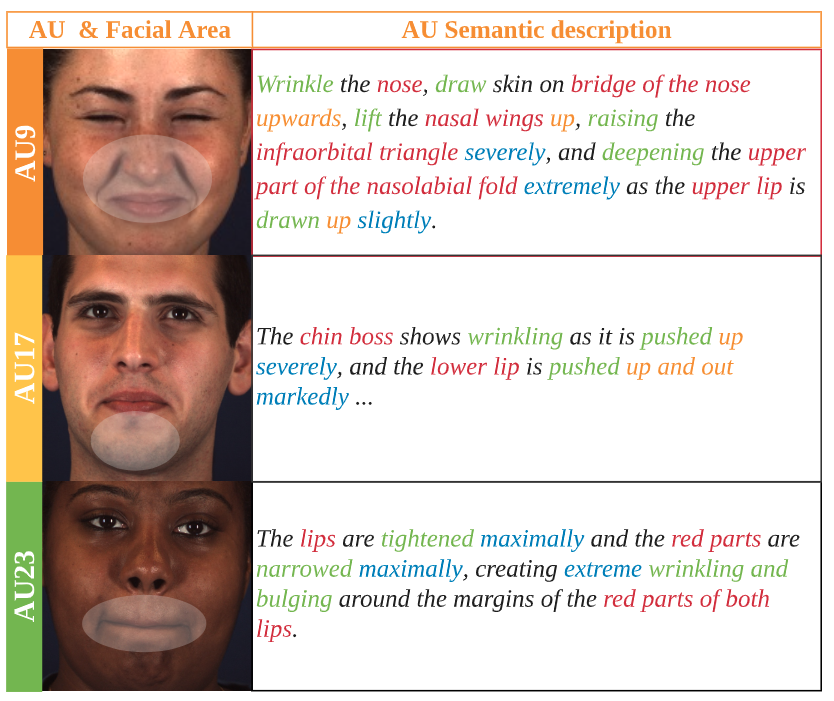

Exploiting Semantic Embedding and Visual Feature for Facial Action Unit Detection

the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021

Huiyuan Yang, Lijun Yin, Yi Zhou and Jiuxiang Gu.

Paper

Supp

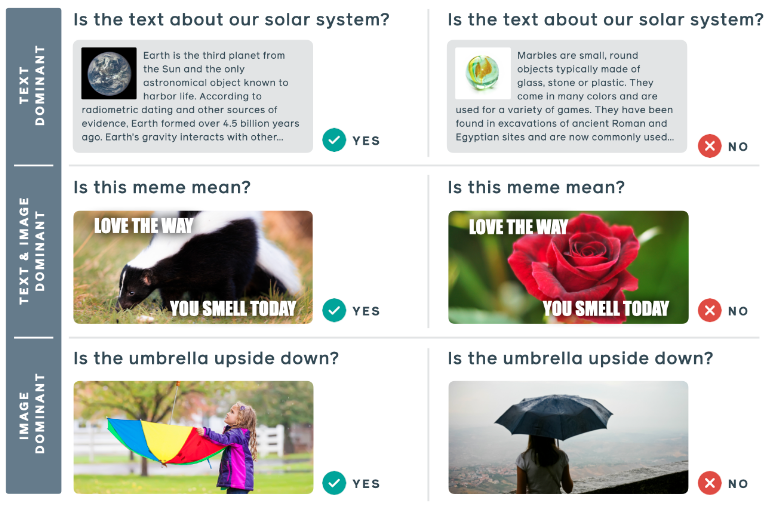

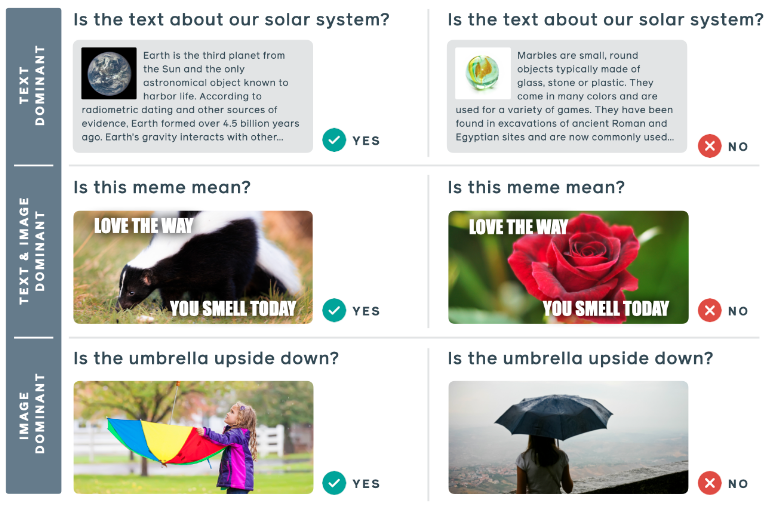

Multimodal Learning for Hateful Memes Detection

IEEE International Conference on Multimedia and Expo (ICME) Workshop, 2021

Yi Zhou, Zhenhao Chen, Huiyuan Yang.

Paper

GitHub

2020

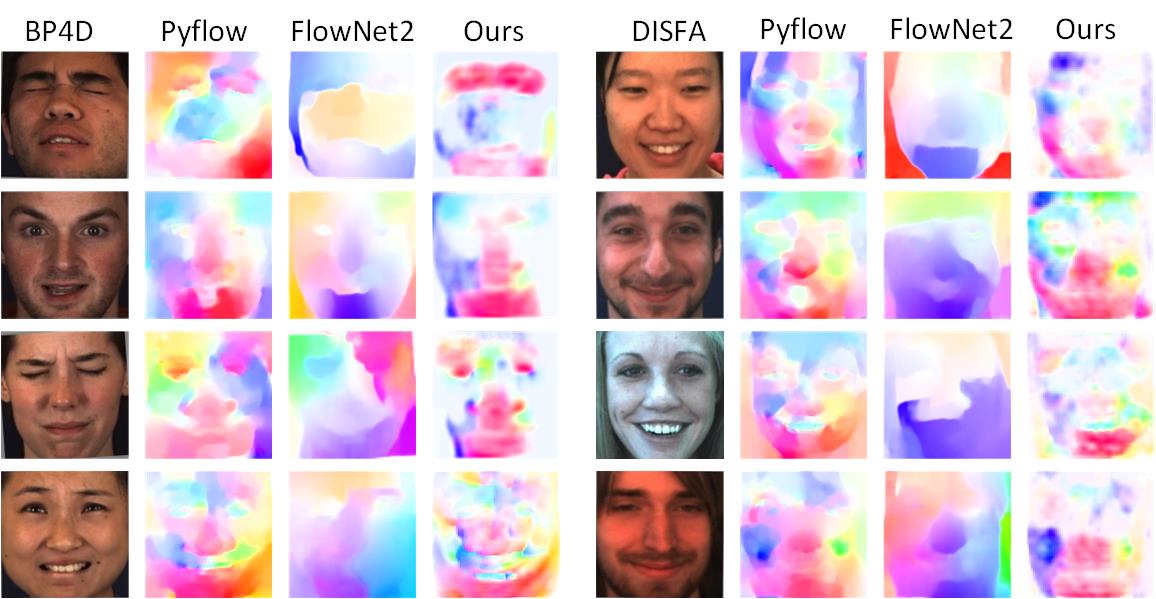

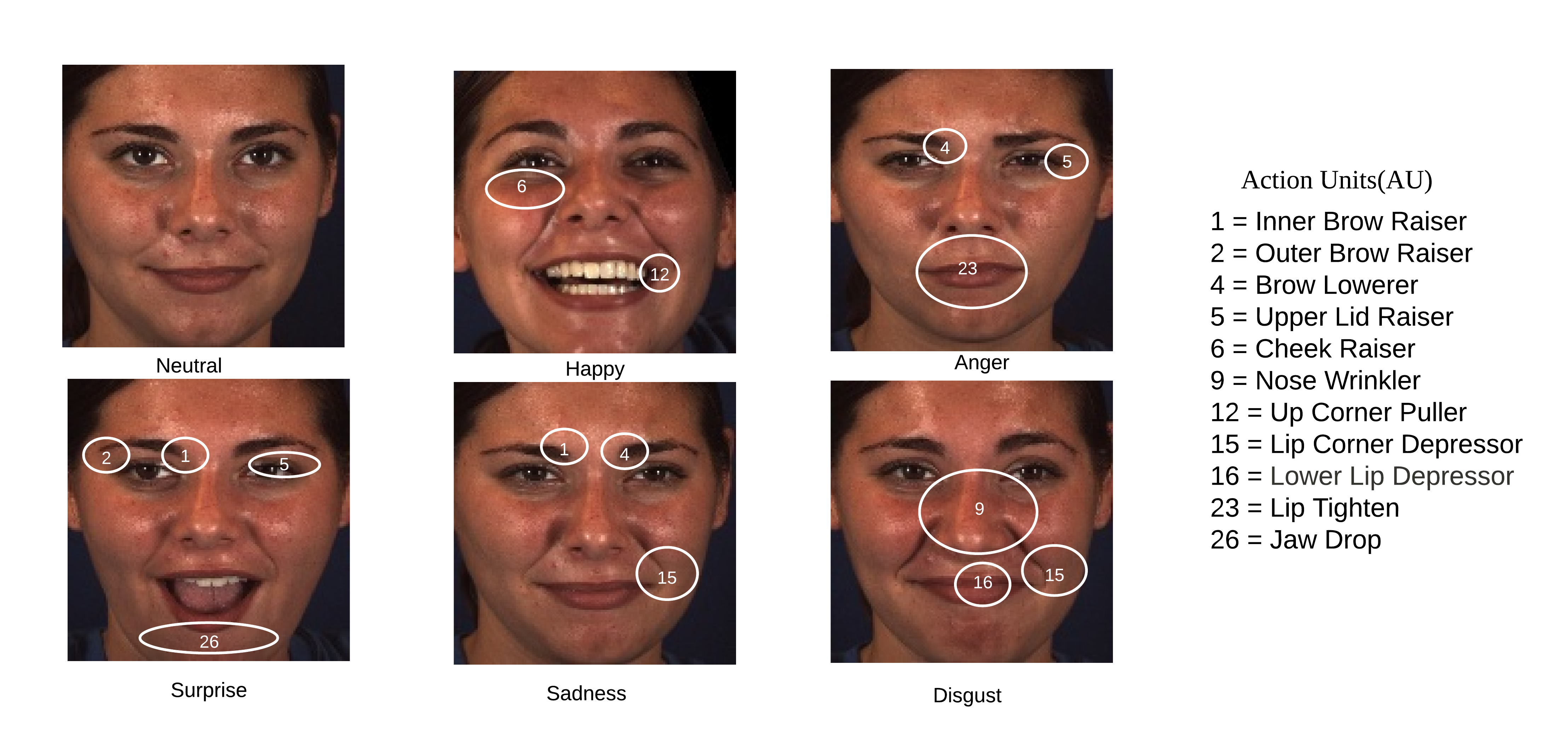

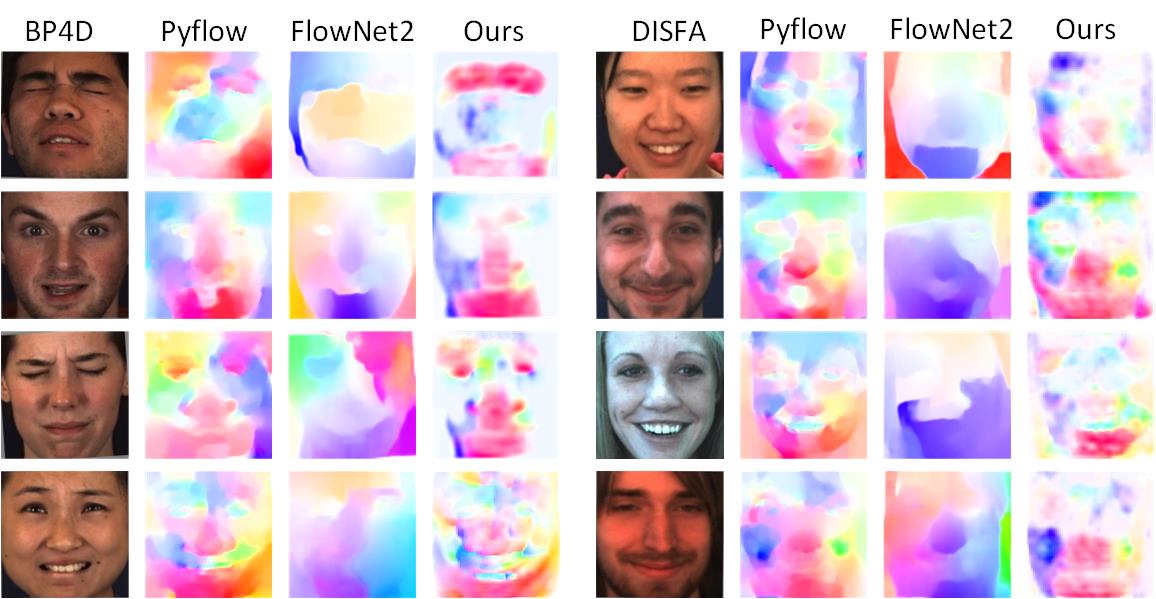

RE-Net: A Relation Embedded Deep Model for Action Unit Detection

Proceedings of the Asian Conference on Computer Vision (ACCV), 2020

Huiyuan Yang and Lijun Yin

Paper

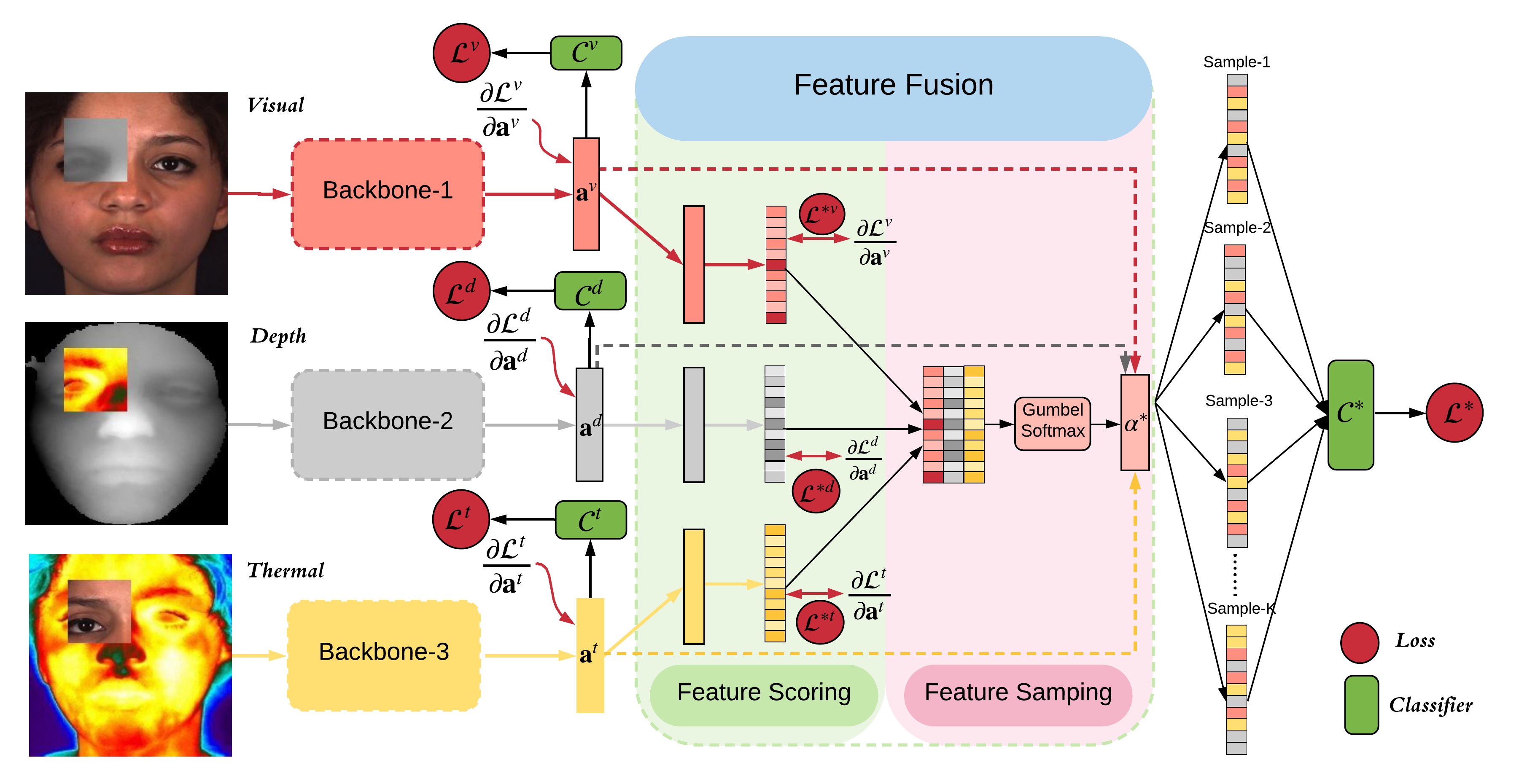

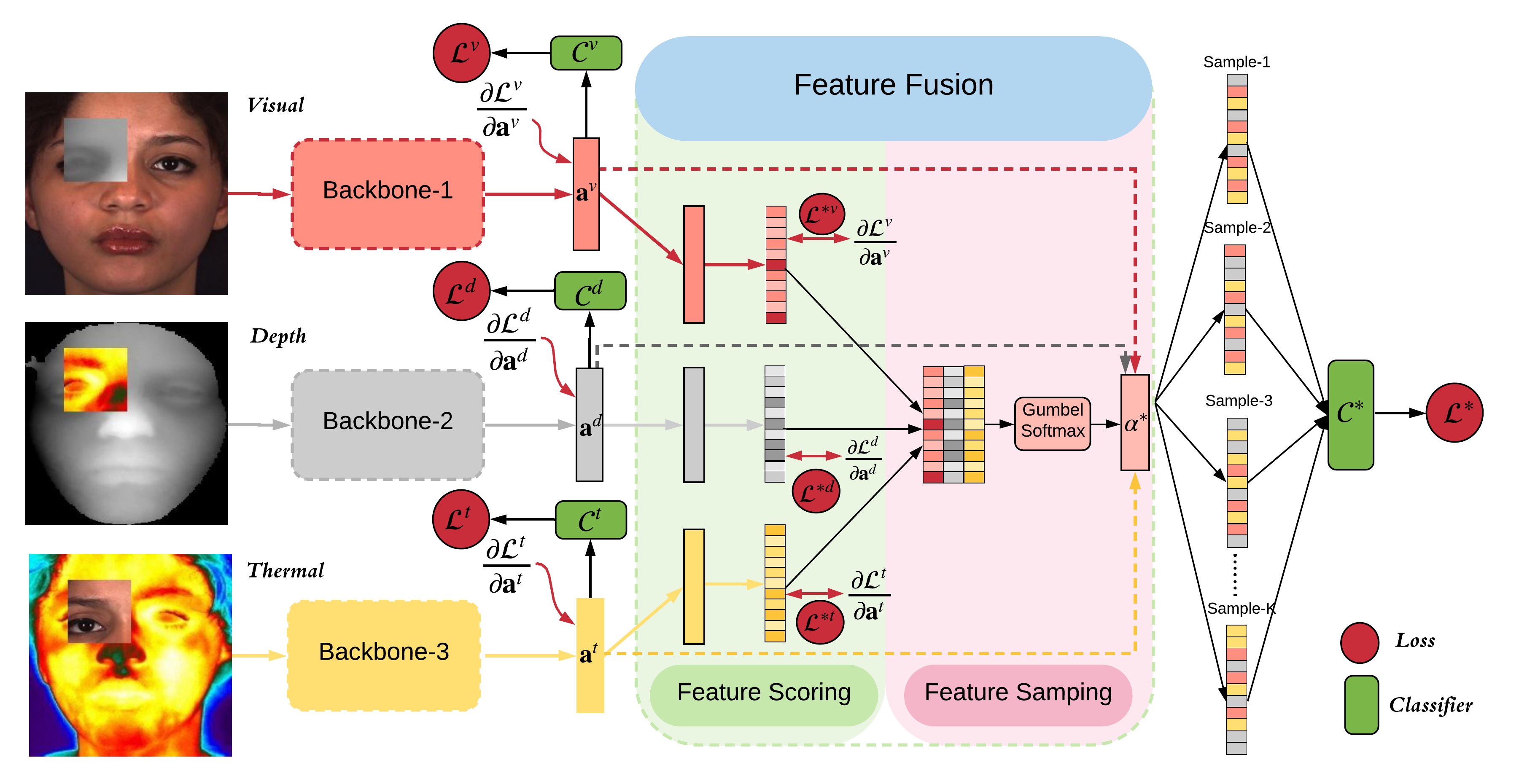

Adaptive Multimodal Fusion for Facial Action Units Recognition

Proceedings of the 28th ACM International Conference on Multimedia (ACM MM), 2020

Huiyuan Yang, Taoyue Wang, Lijun Yin

Paper

Set Operation Aided Network for Action Units Detection

15th IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2020

Huiyuan Yang, Taoyue Wang, Lijun Yin.

Paper

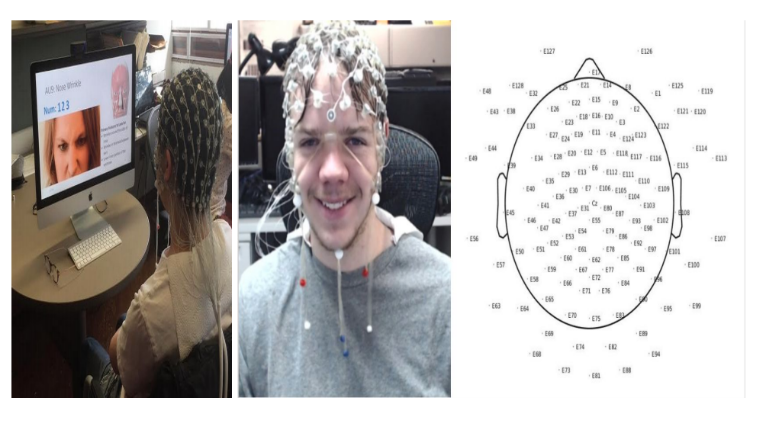

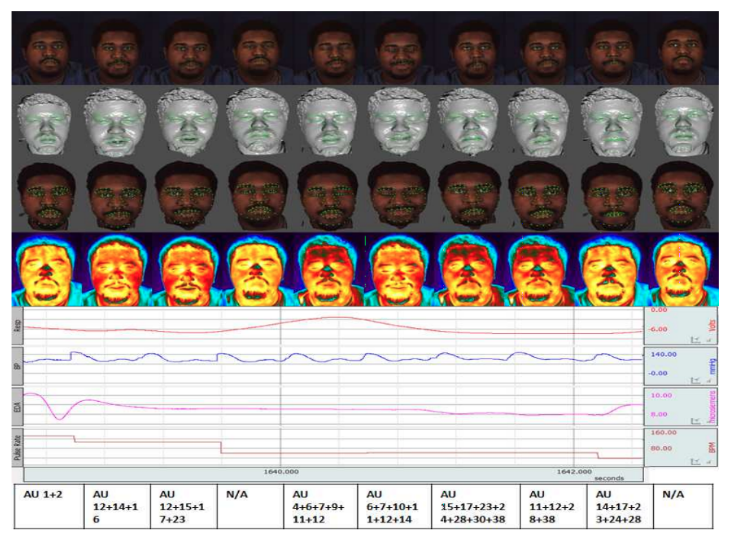

An EEG-Based Multi-Modal Emotion Database with Both Posed and Authentic Facial Actions for Emotion Analysis

15th IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2020

Xiaotian Li, Xiang Zhang, Huiyuan Yang, Wenna Duan, Weiying Dai, Lijun Yin.

Paper

BU-EEG dataset

2019

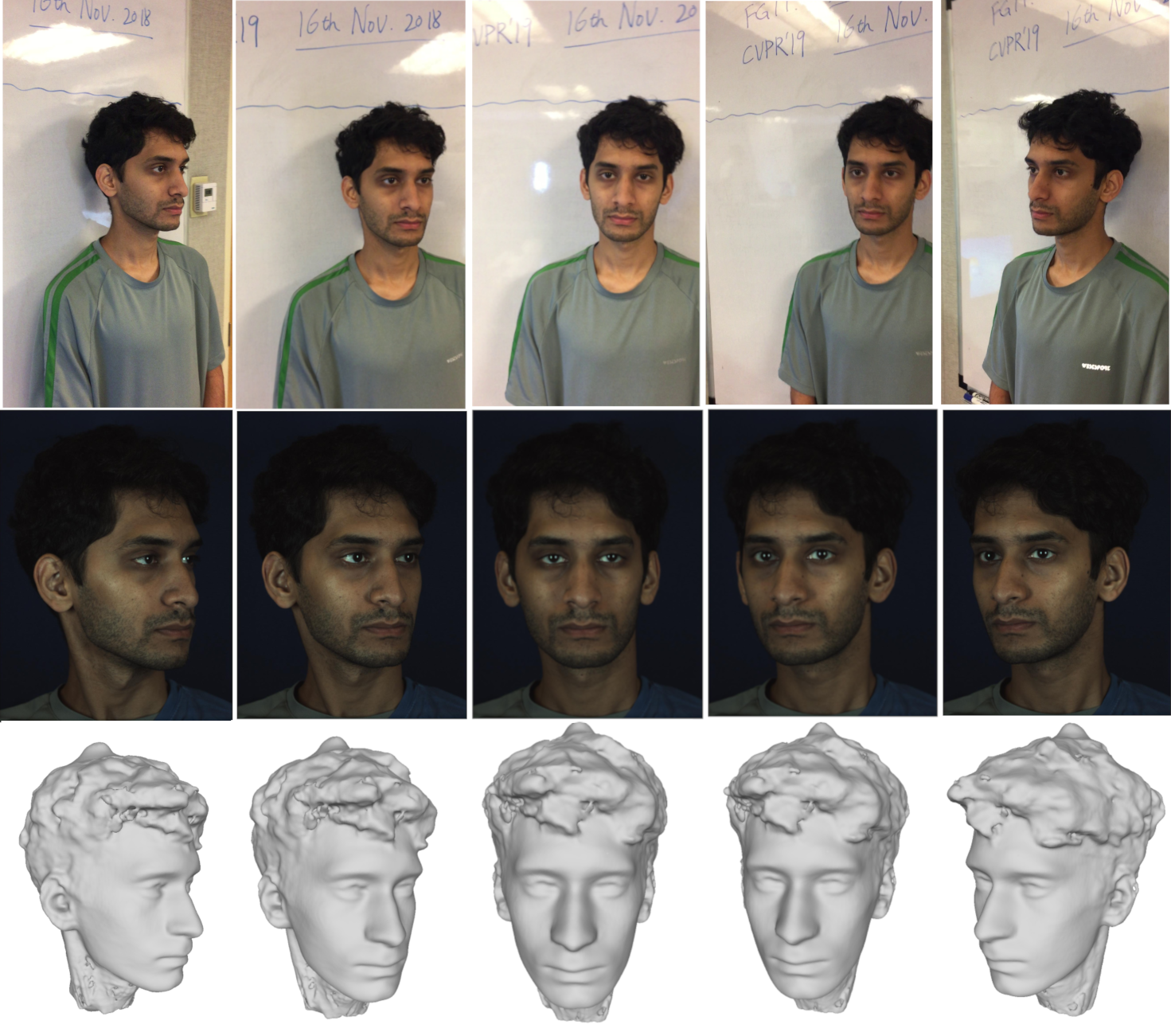

The 2nd 3D Face Alignment in the Wild Challenge (3DFAW-Video): Dense Reconstruction From Video

IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), 2019

Rohith Pillai, Laszlo Jeni, Huiyuan Yang, Zheng Zhang, Lijun Yin, Jeffrey F Cohn

Paper

Project Page

Learning Temporal Information From A Single Image for AU Detection

14th IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2019 [Oral]

Huiyuan Yang, Lijun Yin

Paper

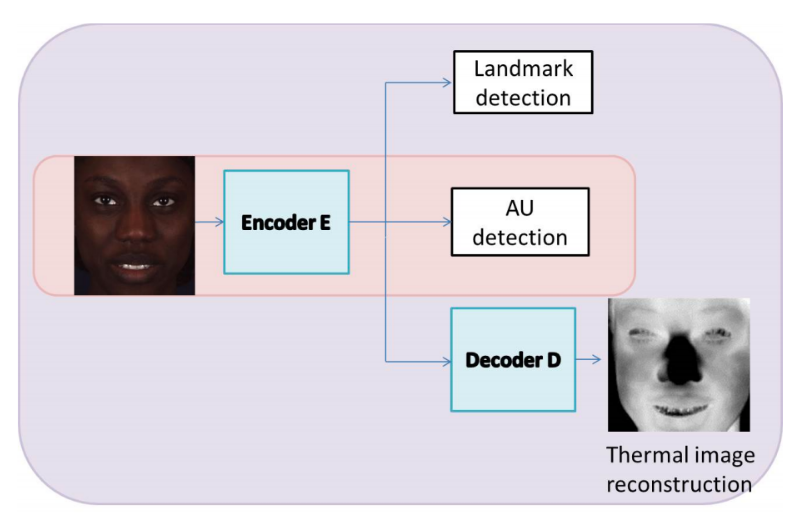

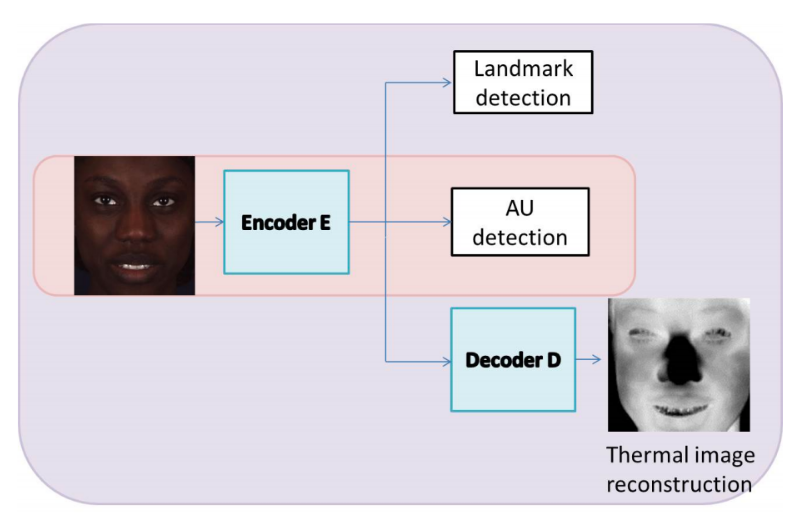

Multi-modality Empowered Network for Facial Action Unit Detection

2019 IEEE Winter Conference on Applications of Computer Vision (WACV), 2019

Peng Liu, Zheng Zhang, Huiyuan Yang and Lijun Yin

Paper

2018

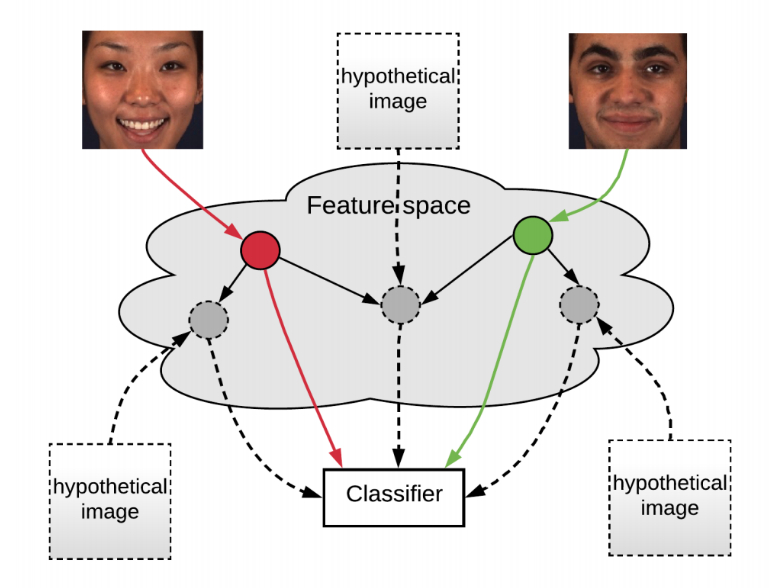

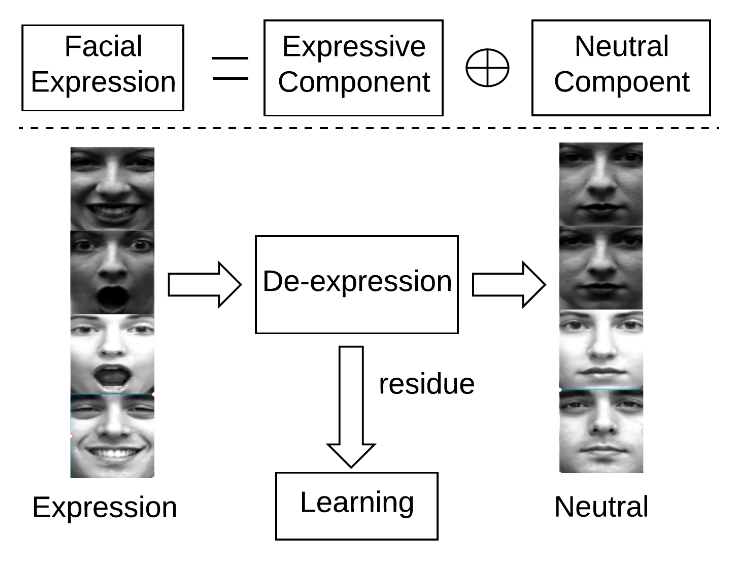

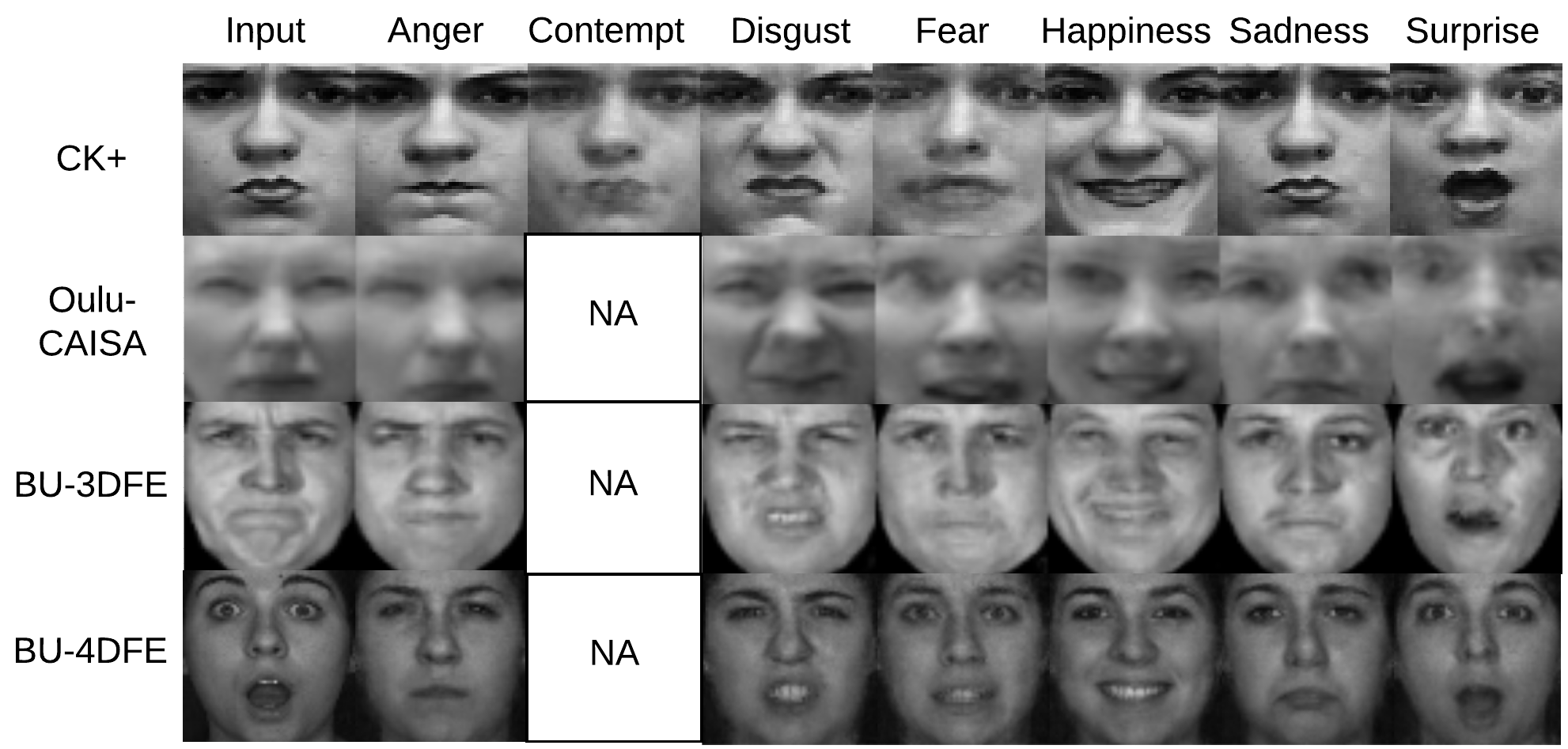

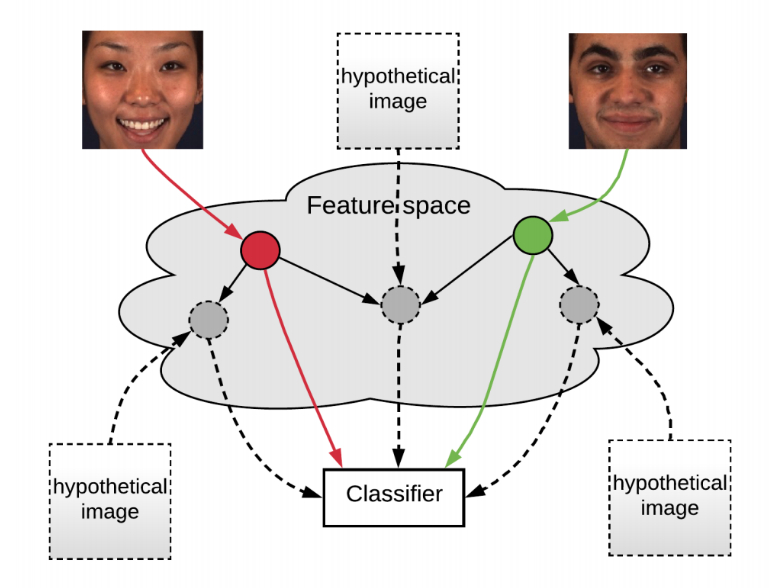

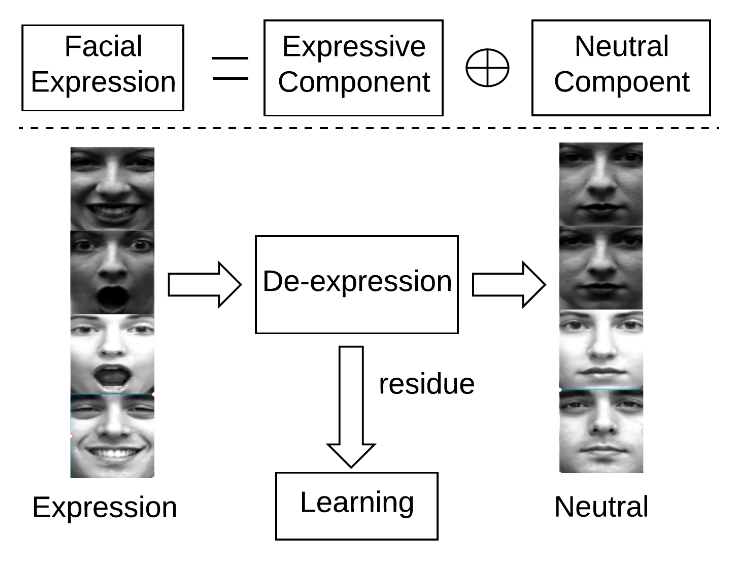

Facial Expression Recognition by De-expression Residue Learning

the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018

Huiyuan Yang, Umur Ciftci and Lijun Yin.

Paper

Identity-Adaptive Facial Expression Recognition Through Expression Regeneration Using Conditional Generative Adversarial Networks

13th IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2018

Huiyuan Yang, Zheng Zhang and Lijun Yin.

Paper

2017

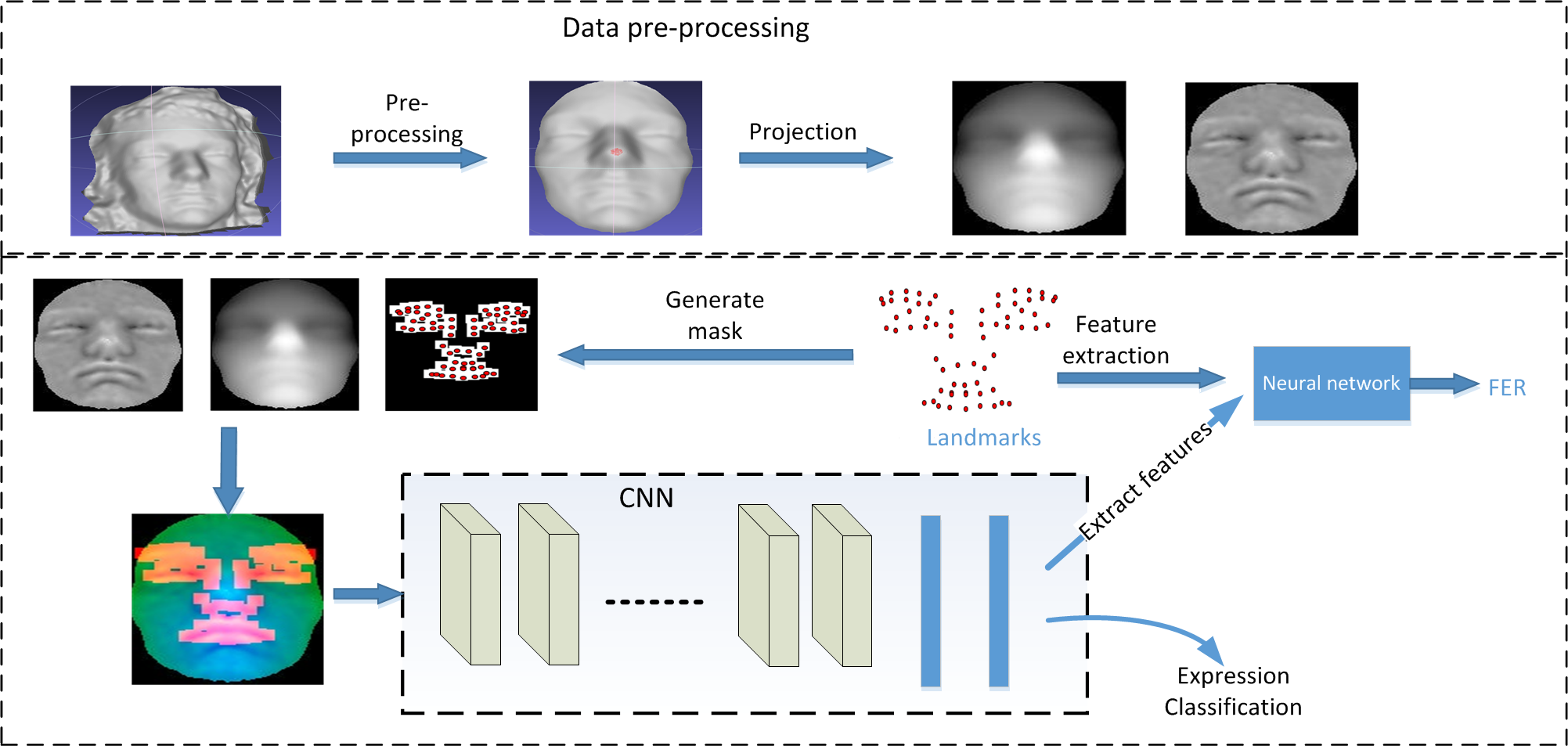

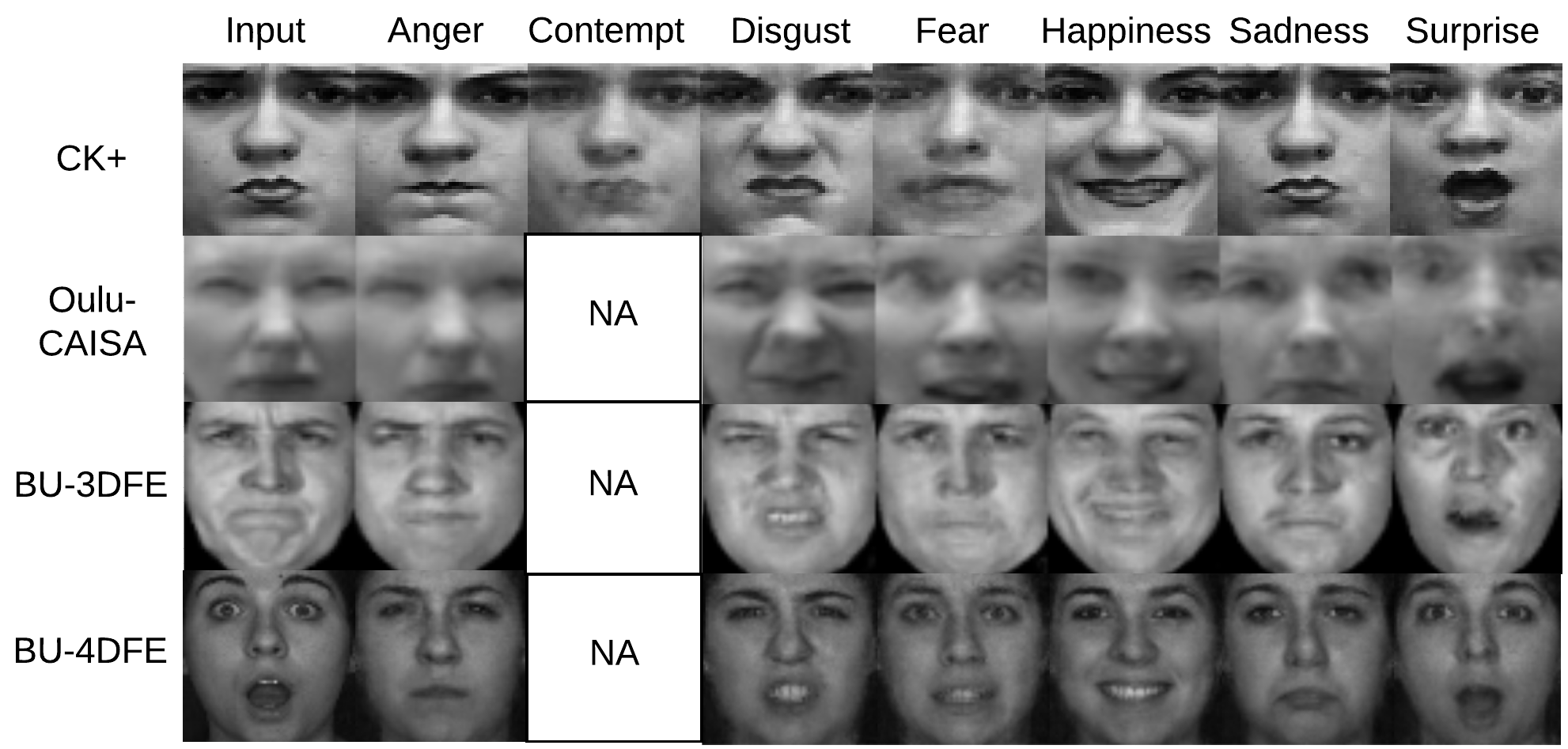

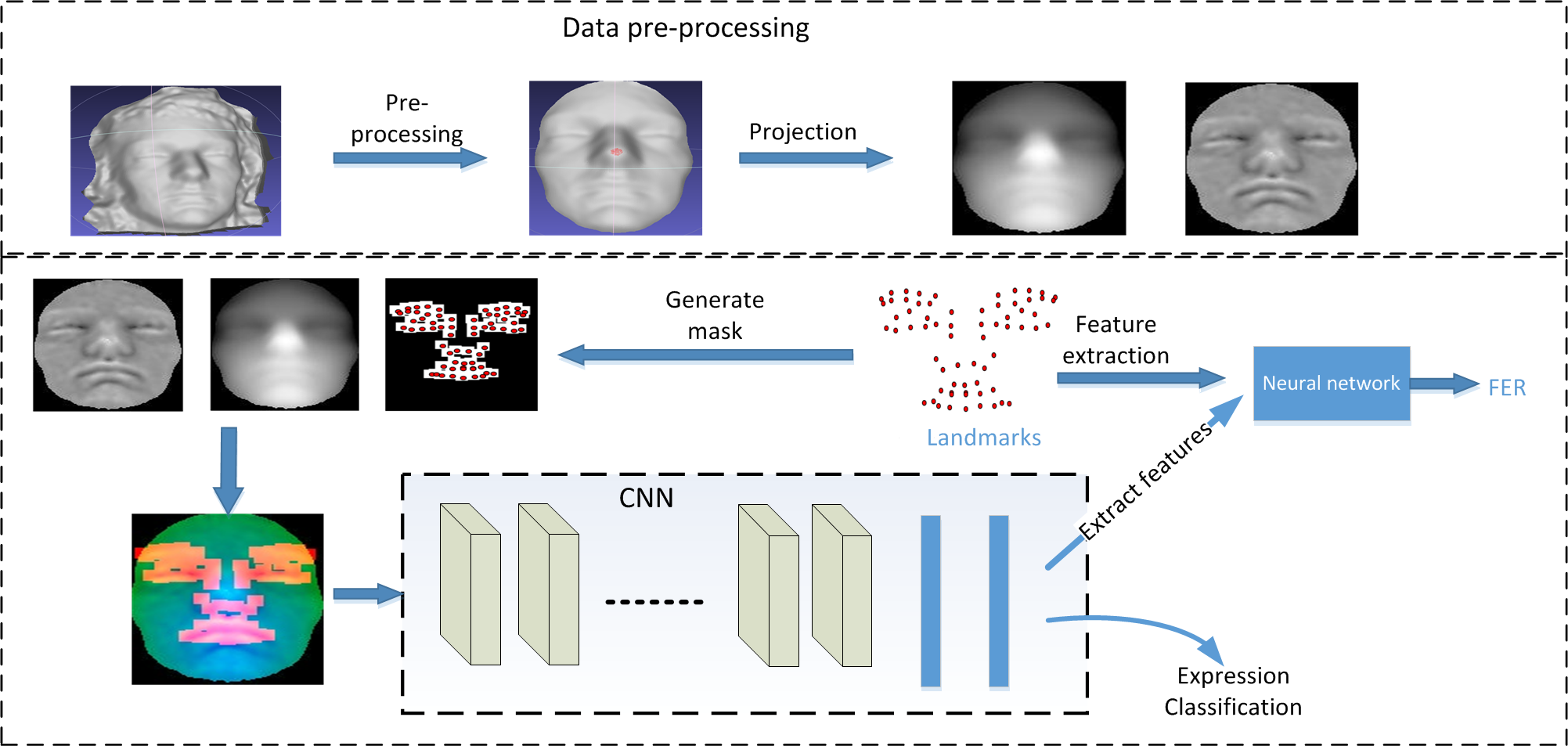

CNN based 3D facial expression recognition using masking and landmark features

Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), 2017

Huiyuan Yang and Lijun Yin.

Paper

2016

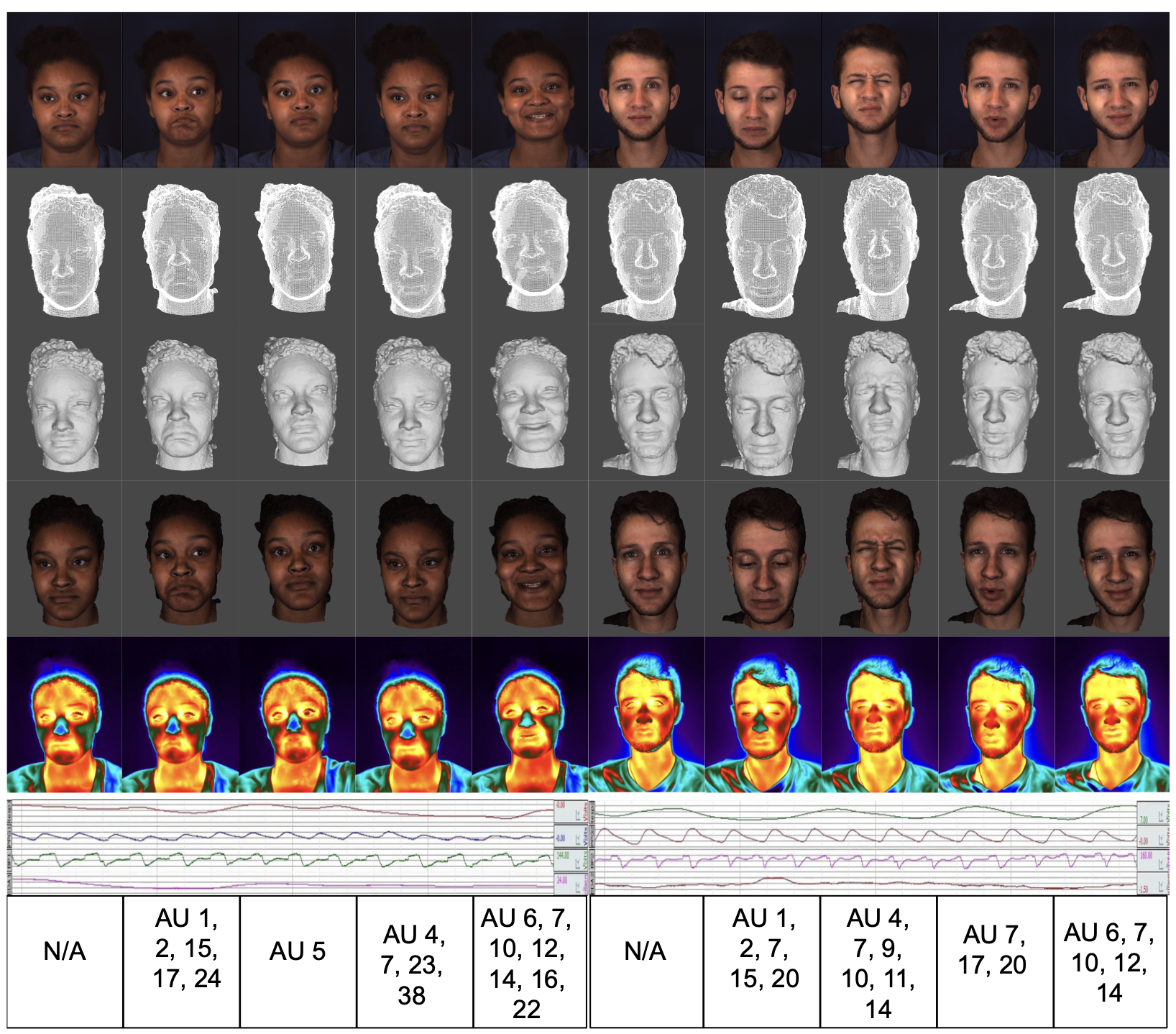

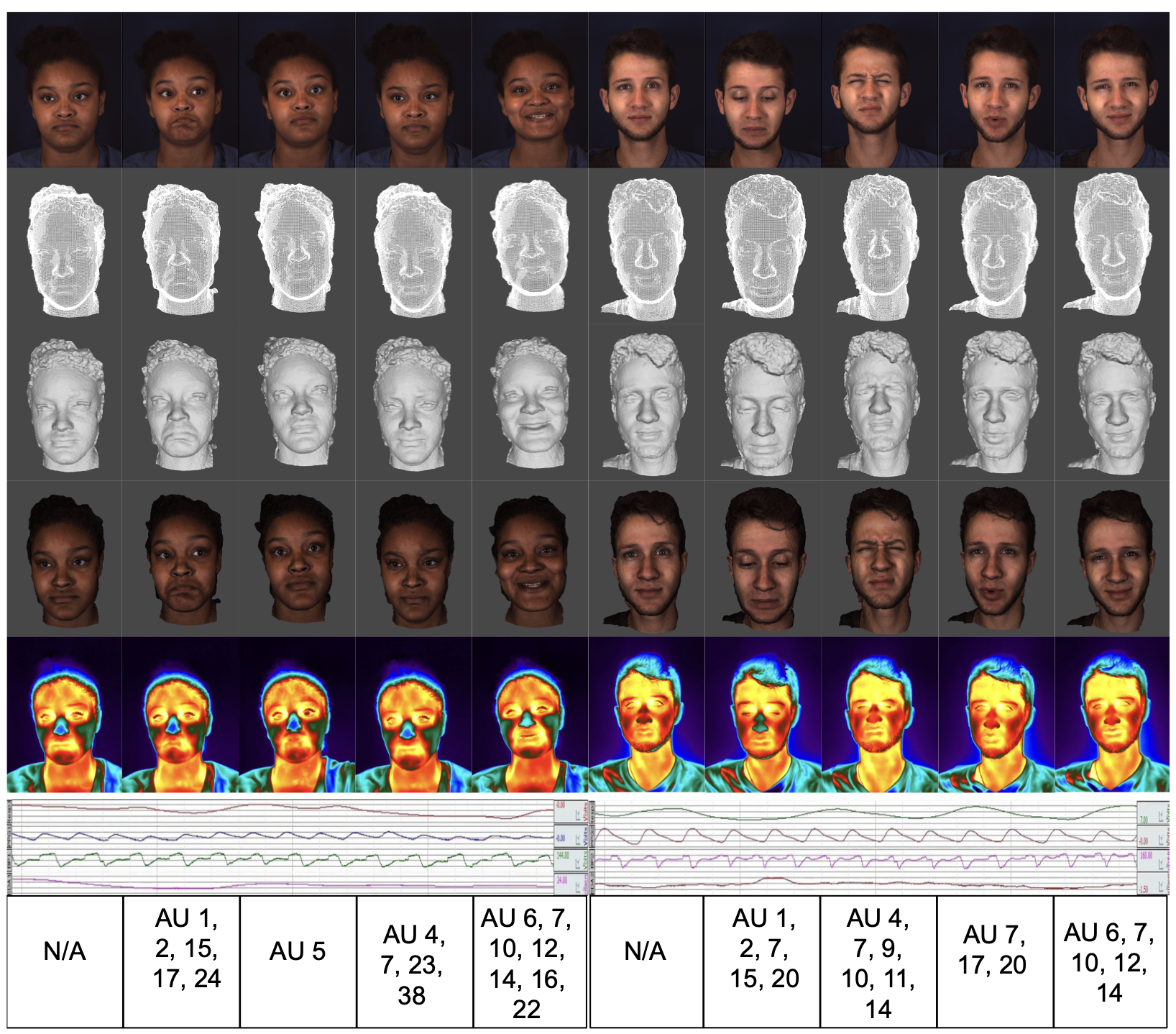

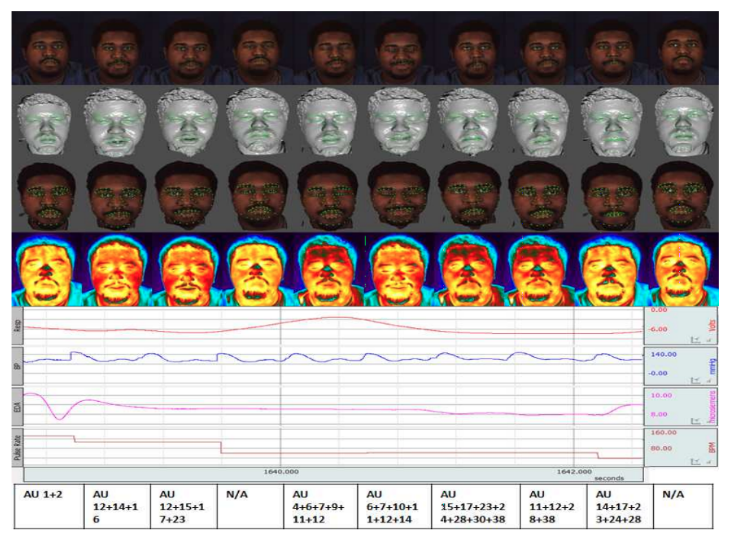

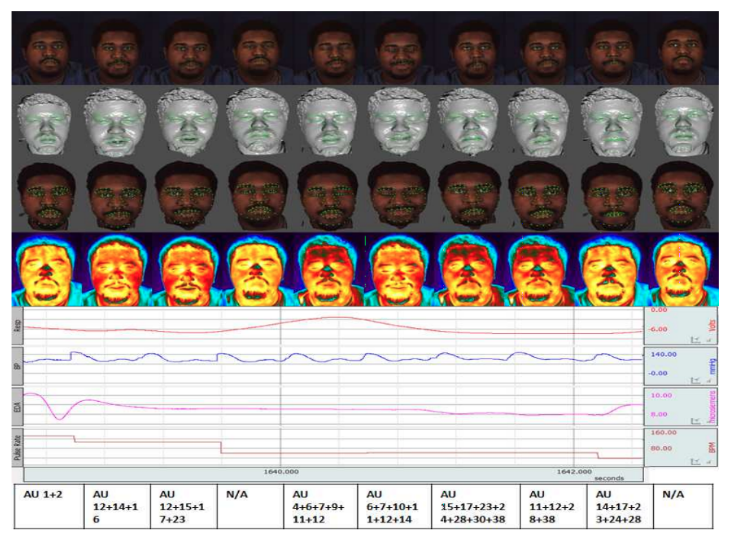

Multimodal spontaneous emotion corpus for human behavior analysis

the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016

Zhang Z, Girard JM, Wu Y, Zhang X, Liu P, Ciftci U, Canavan S, Reale M, Horowitz A, Yang H, Cohn JF.

Paper

BP4D+ dataset

Released Datasets

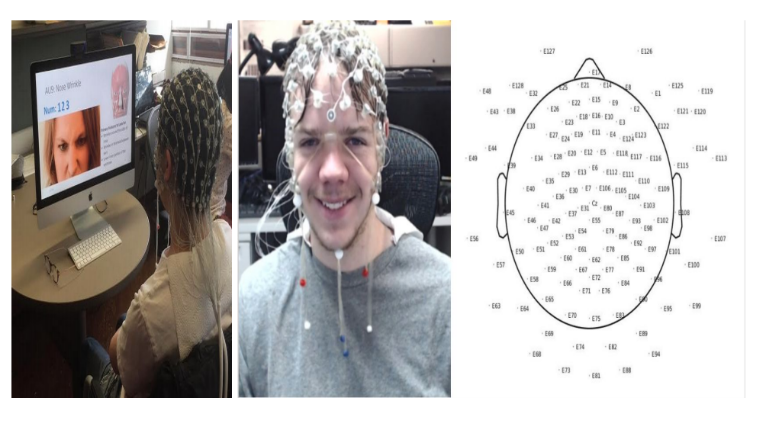

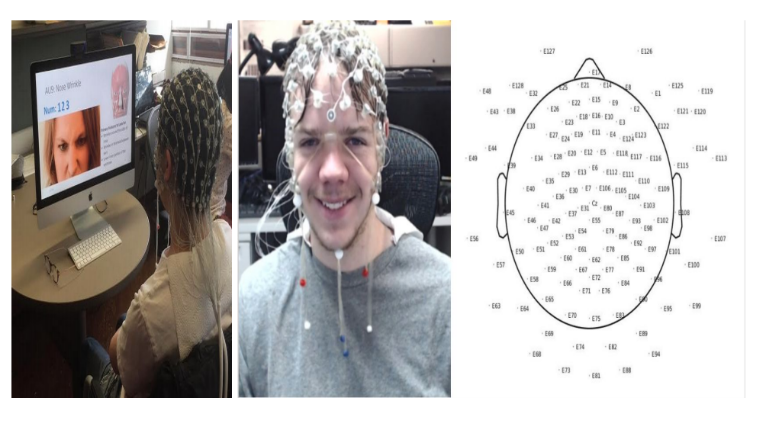

BU-EEG

(2020) [Link]

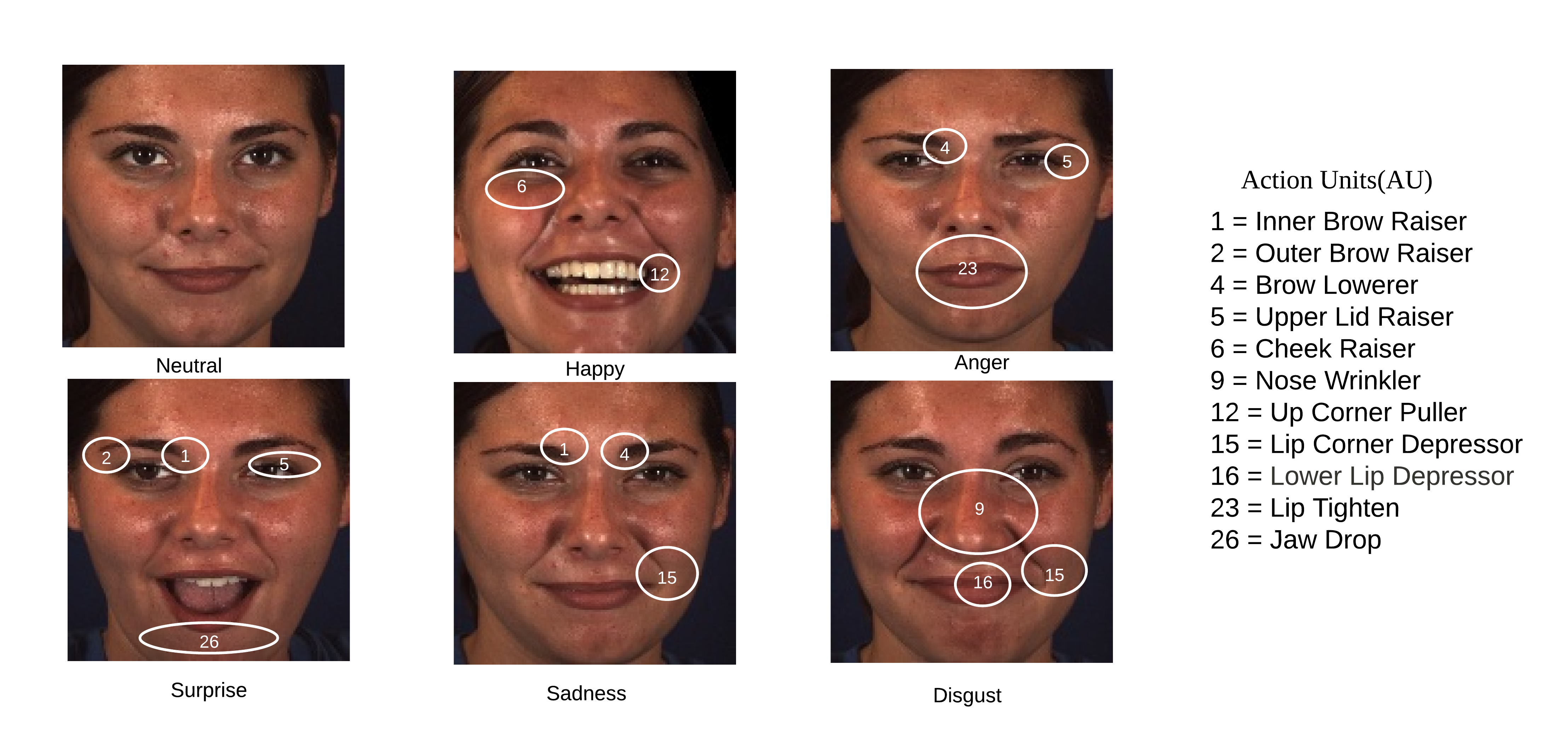

An EEG-Based Multi-Modal Emotion Database.

The BU-EEG database records both 128-channel EEG signals and face videos, including posed expressions, facial action units, and spontaneous expressions, from 29 participants of different ages, genders, and ethnic backgrounds. BU-EEG features the correspondence between EEG and individual AUs, the first of its kind. A total of 2,320 experiment trials were recorded and released to the public.

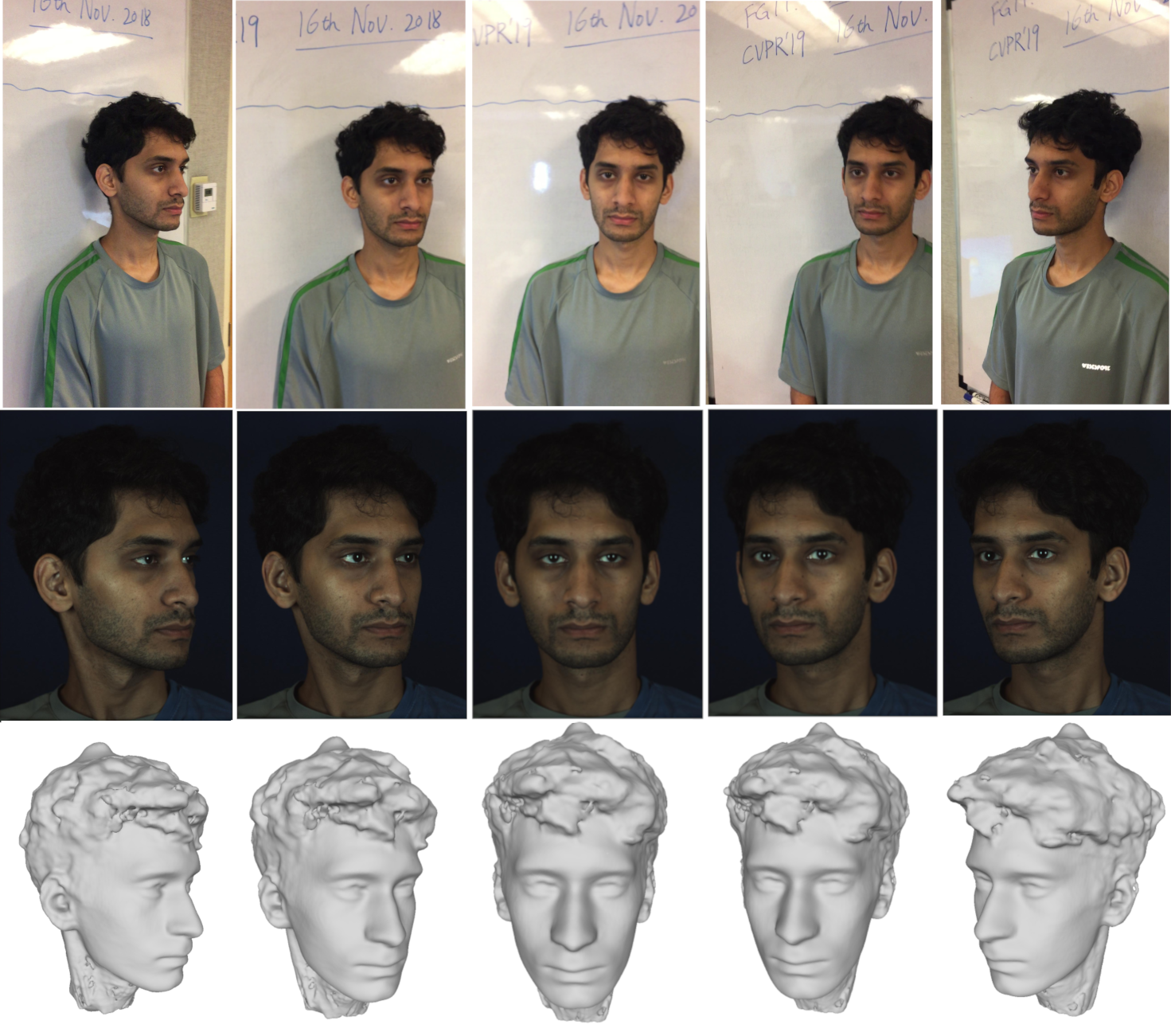

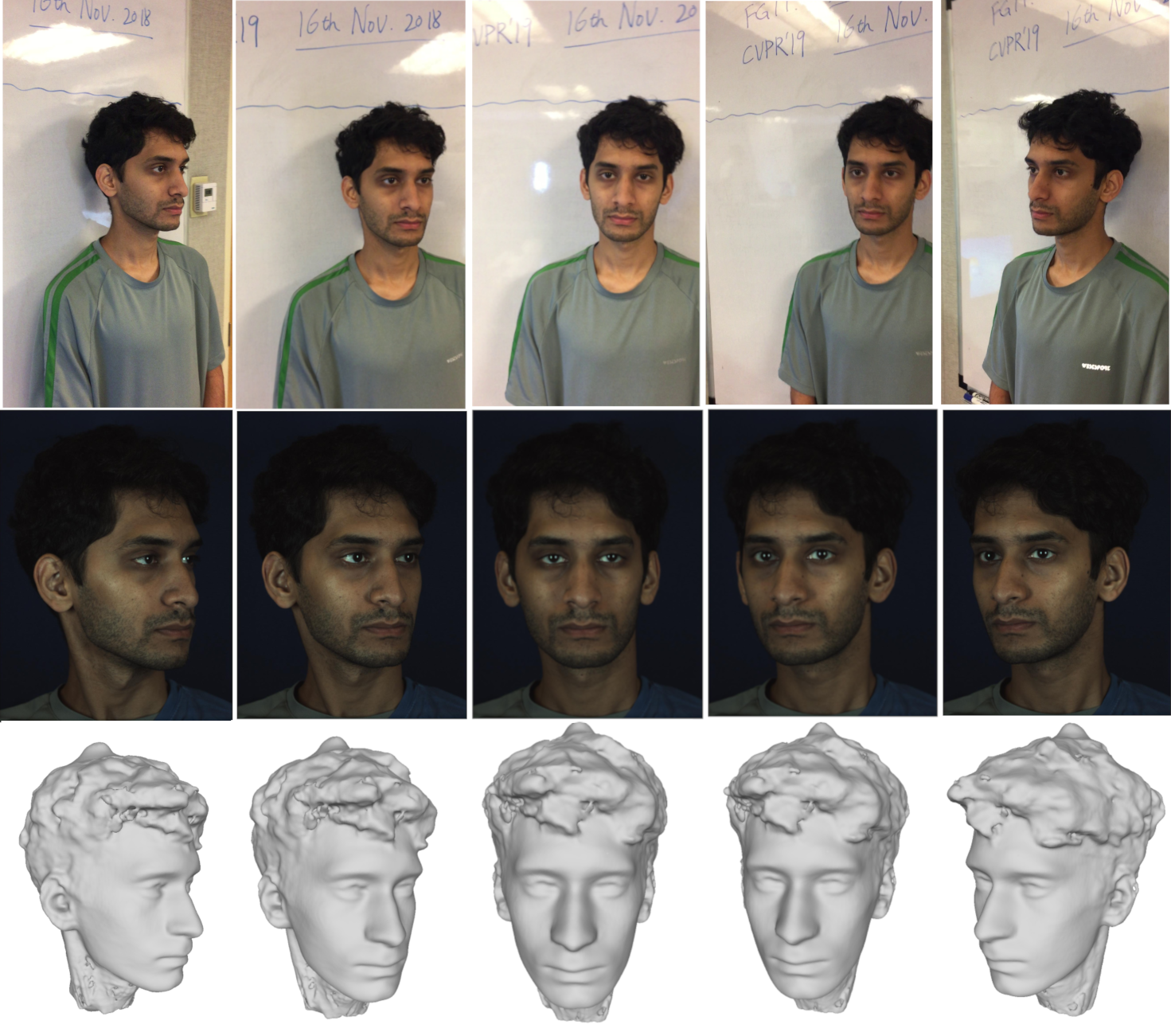

3DFAW

(2019) [Link]

3D Face Alignment in the Wild Challenge

The 3DFAW dataset contains three components for each of its 70 subjects: 1) a high-resolution 2D video; 2) a high-resolution 3D ground-truth mesh model; and 3) an unconstrained 2D video from an iPhone. The dataset is intended to facilitate research on 3D face alignment in the wild and to serve as a benchmark for evaluating dense 3D face reconstruction. It is publicly accessible to the research community.

BP4D+

(2016) [Link]

Multimodal Spontaneous Emotion database

BP4D+ is a Multimodal Spontaneous Emotion Corpus containing synchronized 3D, 2D, thermal, and physiological data sequences (e.g., heart rate, blood pressure, skin conductance (EDA), and respiration rate), along with meta-data (facial features and FACS codes). The dataset was collected from 140 subjects (58 males and 82 females) of diverse ages and ethnicities, yielding more than 10 TB of high-quality data for the research community.